Machine Learning / Deep Learning

Signal understanding and perception pipelines that combine CNNs, Transformers, and multimodal fusion to support sleep staging, activity recognition, and embedded inference.

- Sleep/ECG interpretation for wearable healthcare

- Multimodal human-action and sensor fusion models

- Edge-ready architectures with knowledge distillation

Research Area Overview

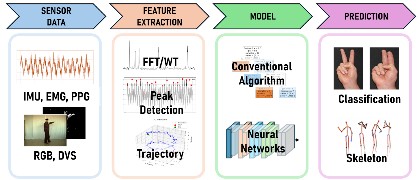

We develop deep learning systems that answer the demand for reliable AI in computer vision, biosignal processing, and natural language tasks. Where traditional models fall short, we engineer multi-stream architectures that fuse RGB, depth, IMU, and physiological measurements to achieve robust perception on edge hardware.
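As a rough illustration of what fusing several streams can mean, the sketch below performs late fusion: each modality produces its own class logits, and the per-modality probabilities are combined by a weighted average. This is only one possible fusion scheme, not necessarily the one used in our models; the function names, the modality keys (`rgb`, `imu`), and the weights are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def late_fusion(stream_logits, weights=None):
    """Fuse per-modality logits by a weighted average of their probabilities.

    stream_logits: dict mapping a modality name to its list of class logits.
    weights: optional dict of non-negative per-modality weights
             (hypothetical values; equal weighting is assumed by default).
    """
    names = list(stream_logits)
    if weights is None:
        weights = {name: 1.0 for name in names}
    weight_sum = sum(weights[name] for name in names)
    if weight_sum <= 0:
        raise ValueError("at least one modality needs a positive weight")

    n_classes = len(stream_logits[names[0]])
    fused = [0.0] * n_classes
    for name in names:
        probs = softmax(stream_logits[name])
        for i, p in enumerate(probs):
            fused[i] += (weights[name] / weight_sum) * p
    return fused


# Example: two streams that disagree; the RGB stream is trusted more.
fused = late_fusion(
    {"rgb": [2.0, 0.5, 0.1], "imu": [0.2, 1.5, 0.3]},
    weights={"rgb": 0.7, "imu": 0.3},
)
predicted_class = fused.index(max(fused))
```

Late fusion keeps each stream independent, so a missing sensor can be handled by setting its weight to zero; feature-level fusion inside the network is an alternative when the streams are tightly synchronized.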

Deep Learning

Deep learning is the current and most impactful wave of neural-network research. Our lab builds models that target state-of-the-art performance across computer vision, biosignals, and multimodal fusion, while prioritizing interpretability for clinical partners. From sleep-state estimation to ECG analytics, we refine both the architecture and the deployment toolchain.

Artificial Intelligence

Moving beyond any single algorithm, we explore AI as the combination of machine learning, knowledge modeling, and embedded reasoning. Neural networks already deliver strong results across many industries, and we extend them with biologically inspired mechanisms drawn from neuromorphic research. This combination lets us design models that are both accurate and energy-aware.

Data Mining

Many of our deliverables begin with large-scale data preparation. We engineer pipelines that ingest heterogeneous sensor feeds, text corpora, and biomedical records, then apply pattern-mining techniques to extract actionable features. These insights feed directly into our ML/DL stacks and digital healthcare applications.
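A minimal sketch of the feature-extraction step, assuming a single sensor channel that has already been cleaned and resampled: the signal is cut into overlapping windows and a few summary statistics are computed per window. The function name, the window parameters, and the chosen statistics are illustrative assumptions, not a description of our production pipeline.

```python
from statistics import mean, pstdev


def window_features(samples, window_size, step):
    """Slide a fixed-size window over a 1-D signal and summarize each window.

    samples: list of numeric sensor readings (one channel).
    window_size: number of samples per window (hypothetical choice).
    step: hop between consecutive window starts; step < window_size overlaps.
    """
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")

    features = []
    # Only full windows are kept; a trailing partial window is dropped.
    for start in range(0, len(samples) - window_size + 1, step):
        window = samples[start:start + window_size]
        features.append({
            "start": start,
            "mean": mean(window),
            "std": pstdev(window),  # population standard deviation
            "range": max(window) - min(window),
        })
    return features


# Example: six readings, windows of four samples with a hop of two.
rows = window_features([1, 2, 3, 4, 5, 6], window_size=4, step=2)
```

Each resulting row can then be joined with features from other sources before it is passed to a downstream model.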

Representative Topics

- Multimodal perception (RGB, depth, event-based, and wearable sensors)

- Explainable sleep staging and biosignal interpretation

- Lightweight architectures and AutoML for embedded deployment

- Feature engineering and knowledge distillation for small datasets
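For readers unfamiliar with knowledge distillation, the sketch below shows the commonly used soft-target formulation: a small student model is trained to match the temperature-softened output distribution of a larger teacher, blended with the usual cross-entropy on the true label. It is a generic illustration rather than our specific training recipe; the function names and the default `temperature` and `alpha` values are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperature gives softer outputs."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Blend a soft-target KL term with hard-label cross-entropy.

    alpha weights the distillation term; (1 - alpha) weights cross-entropy.
    The KL term is scaled by temperature**2, a common convention that keeps
    its gradient magnitude comparable across temperatures.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)

    # KL(teacher || student) over the softened distributions.
    kl = sum(
        pt * (math.log(pt) - math.log(ps))
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0.0
    )

    # Standard cross-entropy of the student against the true class index.
    cross_entropy = -math.log(softmax(student_logits)[label])

    return alpha * temperature ** 2 * kl + (1.0 - alpha) * cross_entropy


# Example: the student is less confident than the teacher on class 0.
loss = distillation_loss([1.0, 0.5, 0.2], [3.0, 0.5, -1.0], label=0)
```

In practice the same loss would be computed on batches inside a deep learning framework; the plain-Python version here is only meant to make the arithmetic explicit.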